In an ideal world, I think we’d all prefer faculty-graded, free-response exams. Unfortunately, that’s just not realistic for a chemistry professor teaching large service classes.[1. The largest classes I’ve taught have up to about 200 students.] While we do use online homework for the classes I teach, we’re not really set up to use these sorts of systems as a primary assessment tool. That leaves, of course, machine-graded multiple choice (MC) as the go-to format for most (but not all) of my exam questions.

Truthfully, I do think that MC gets a bad rap sometimes. The problem with a lot of the MC questions I’ve seen (e.g., in textbook exam banks) is not the format; it’s that they’re shallow. Bad MC questions tend to be one-note; great questions are like chords, regardless of format.

Free-response questions are also, of course, not without their problems. We have TA support for organic exam grading here at Miami. However, I’ve never been all that happy with the results. This isn’t a slight against our TAs. They’re doing their best, but it’s just not that easy to get six different people to grade in exactly the same way. We sometimes try to compensate by designing questions with unambiguous answers and various contrivances to railroad the answers into a consistent form.[2. Partially drawn structures, fill-in boxes all over the place, etc.] At some point, though, you really have to ask yourself whether the free-response question you’ve produced is any more “free” than the MC version would have been.

Which is all to say that, like many other chemistry professors, I feel stuck with MC until I can get my hands on an exam room with many computers.

Anyone who’s taught (or taken) one of these courses would probably agree that a major problem with MC is that it’s merciless. We’ve all sat across from students who almost got every question right but ultimately got them all wrong. This may be fair, but it’s not at all satisfying, since everyone involved knows that if the same questions had been free-response, partial credit would have been awarded.

Partial-credit multiple choice is not at all a new idea. The problem from my end was more technical. We use fill-in-the-bubble Scantron forms here at Miami. It may very well be that other universities have put partial-credit scoring systems in place at their test scoring facilities, but at least here the only option is the usual one-right-answer key.

However, we are sent the raw output from the Scantron scanner along with the grades as a plain text file. As it happens, a while back I was trying to brush up on my Programming 101 skills. So I put together a little Python script that takes raw Scantron output, a grading key, and (if needed) a scramble form and outputs the student scores and a bunch of statistics about each question. It lets a completely arbitrary key be applied, so partial credit can be awarded, different questions can have different values,[2. Not that I’ve ever actually assigned different values to different questions.] questions can be removed entirely, etc.
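To make the idea of an “arbitrary key” concrete, here is a minimal sketch of how such a key could be represented and applied. This is not the code from the script itself; the names `KEY`, `score_student`, and `response_counts`, the dictionary layout, and the student data are all made up for illustration.

```python
# Hypothetical key: for each question, the points awarded per response.
# Any response not listed for a question earns zero.
KEY = {
    1: {"A": 4},            # ordinary question: one right answer
    2: {"C": 4, "D": 2},    # D is a near miss and earns partial credit
    3: {},                  # question removed: no response earns points
}


def score_student(responses, key):
    """Return the total points for one student.

    responses maps question number -> bubbled letter (None if left blank).
    """
    return sum(key[q].get(responses.get(q), 0) for q in key)


def response_counts(all_responses, question):
    """Count how many students chose each answer to one question."""
    counts = {}
    for responses in all_responses:
        choice = responses.get(question)
        counts[choice] = counts.get(choice, 0) + 1
    return counts


# Made-up responses for three students.
students = {
    "student_a": {1: "A", 2: "C", 3: "B"},
    "student_b": {1: "A", 2: "D", 3: "B"},
    "student_c": {1: "B", 2: "D", 3: None},
}

scores = {name: score_student(r, KEY) for name, r in students.items()}
print(scores)
print(response_counts(students.values(), 2))
```

Because the key is just a table of points per response, partial credit, unequal question values, and dropped questions all fall out of the same lookup.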

- Download the script: grade_mc_2.py

- Edit 1/17/2015: The script is now posted in a GitHub repo. Future versions will be found there.

If you’re familiar with Python, you could probably put this together over lunch. If you haven’t already done so and you’re interested in trying it out, maybe this script will save you a little time. I’ve tried to be thorough in commenting, and there’s not a lot to it. If nothing else you can laugh at the style.

If you’re not familiar with Python, I’ll run through how to actually use this script in my next post.

In later posts, I plan to go through this little program in some detail in the hopes that it can be customized to other folks’ situations (e.g., if the Scantron output is in a different format). I’m also currently working on a related program that interprets scanned images of bubble sheets and extracts the responses into the right format, but that’s still very much a work in progress.

What this has meant for my courses

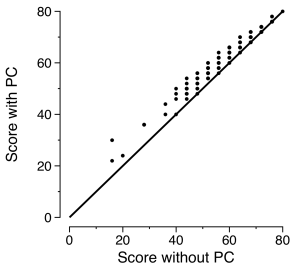

When you get right down to it, giving partial credit like this is a way to curve exam grades; it’s just a bit more nuanced than a lot of other methods in that it’s at least trying to reward right answers.[3. Before this, I traditionally would lower the point total when I wanted to curve exams. Basically, the logic was that I was effectively making one or two of the hard questions bonus questions. The problem is that this rewards the strong students more than the weaker students.] Here’s a plot of the results from the first time we used this system. As you can see, some students in the middle of the pack really benefit from the added credit, gaining up to 10 points out of 80. So, there can be a huge differentiation between students who would have otherwise gotten the same grade. Of course, whether this is a meaningful or warranted differentiation is a different story.

Comparison of multiple-choice grades with and without a partial-credit key applied. The test was out of 80 points (4 points per question). Partial credit answers were given 2 points. The line is just the 1:1 match.
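Each point in a plot like this is just one student’s exam graded twice. As a rough sketch of that comparison, using the 4-point and 2-point values from the caption (the three-question key and the responses below are invented, and `grade` is a hypothetical helper, not a function from the script):

```python
FULL, PARTIAL = 4, 2  # point values from the caption

# Hypothetical key: (correct answer, set of partial-credit answers)
KEY = [("B", {"C"}), ("A", set()), ("D", {"A", "E"})]


def grade(responses, partial=True):
    """Total score for one student, with or without partial credit."""
    total = 0
    for choice, (correct, near_misses) in zip(responses, KEY):
        if choice == correct:
            total += FULL
        elif partial and choice in near_misses:
            total += PARTIAL
    return total


responses = ["C", "A", "E"]               # one made-up student
strict = grade(responses, partial=False)  # usual one-right-answer key
with_partial = grade(responses)           # partial-credit key
gain = with_partial - strict              # distance from the 1:1 line
print(strict, with_partial, gain)
```

A student whose wrong answers are all far off has a gain of zero and sits on the 1:1 line; a student with several near misses moves away from it.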

Mostly what this has meant is that it’s freed me to come up with many more challenging, literature-based, satisfying questions to include on my exams, knowing that the questions will still have merit even if the correct answer isn’t chosen by the majority of the class.